You don’t have to dig too deeply into the archive of dystopian science fiction to uncover the horror that intelligent machines might unleash. The Matrix and The Terminator are probably the best-known examples of self-replicating, intelligent machines attempting to enslave or destroy humanity in the process of building a brave new digital world.

The prospect of artificially intelligent machines creating other artificially intelligent machines took a big step forward in 2017. However, we’re far from the runaway technological singularity futurists are predicting by mid-century or earlier, let alone murderous cyborgs or AI avatar assassins.

The first big boost this year came from Google. The tech giant announced it was developing automated machine learning (AutoML), writing algorithms that can do some of the heavy lifting by identifying the right neural networks for a specific job. Now researchers at the Department of Energy’s Oak Ridge National Laboratory (ORNL), using the most powerful supercomputer in the US, have developed an AI system that can generate, in less than a day, neural networks as good as, if not better than, any developed by a human.

It can take months for the brainiest, best-paid data scientists to develop deep learning software, which sends data through a complex web of mathematical algorithms. The system is modeled after the human brain and known as an artificial neural network. Even Google’s AutoML took weeks to design a superior image recognition system, one of the more standard operations for AI systems today.

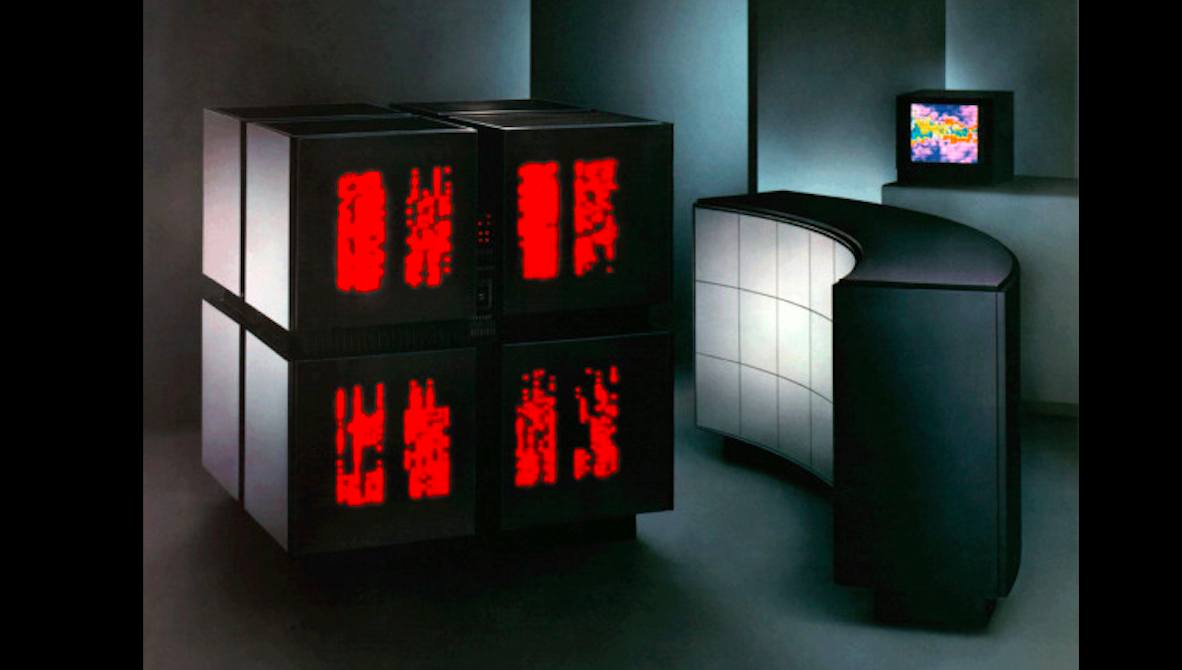

Computing Power

Of course, Google Brain project engineers only had access to 800 graphics processing units (GPUs), a type of computer hardware that works especially well for deep learning. Nvidia, which pioneered the development of GPUs, is considered the gold standard in today’s AI hardware architecture. Titan, the supercomputer at ORNL, boasts more than 18,000 GPUs.

The ORNL research team’s algorithm, called MENNDL for Multinode Evolutionary Neural Networks for Deep Learning, isn’t designed to create AI systems that cull cute cat photos from the internet. Instead, MENNDL is a tool for testing and training thousands of potential neural networks to work on unique science problems.

That requires a different approach from the Google and Facebook AI platforms of the world, notes Steven Young, a postdoctoral research associate at ORNL who is on the team that designed MENNDL.

“We’ve discovered that those [neural networks] are very often not the optimal network for a lot of our problems, because our data, while it can be thought of as images, is different,” he explains to Singularity Hub. “These images, and the problems, have very different characteristics from object detection.”

AI for Science

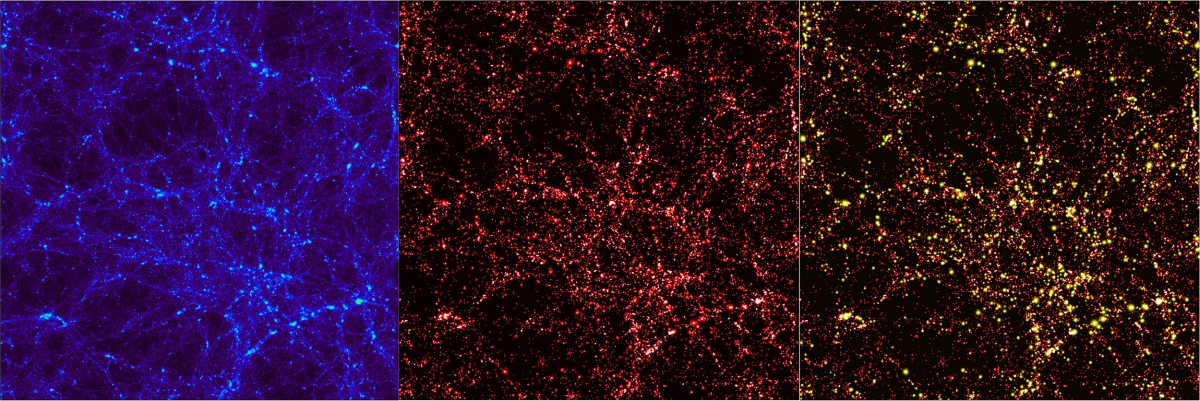

One application of the technology involved a particle physics experiment at the Fermi National Accelerator Laboratory. Fermilab researchers are interested in understanding neutrinos, high-energy subatomic particles that rarely interact with normal matter but could be a key to understanding the early formation of the universe. One Fermilab experiment involves taking a sort of “snapshot” of neutrino interactions.

The team wanted the help of an AI system that could analyze and classify Fermilab’s detector data. MENNDL evaluated 500,000 neural networks in 24 hours. Its final solution proved superior to custom models developed by human scientists.

In another case, a collaboration with St. Jude Children’s Research Hospital in Memphis, MENNDL improved by 30 percent the error rate of a human-designed algorithm for identifying mitochondria inside 3D electron microscopy images of brain tissue.

“We are able to do better than humans in a fraction of the time at designing networks for these sort of very different datasets that we’re interested in,” Young says.

What makes MENNDL particularly adept is its ability to find the optimal hyperparameters (the key variables) for tackling a particular dataset.

“You don’t always need a big, huge deep network. Sometimes you just need a small network with the right hyperparameters,” Young says.
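To make the idea of an evolutionary hyperparameter search concrete, here is a minimal sketch in Python. It is not MENNDL’s code: the search space, the `toy_fitness` scoring function, and every name in it are assumptions made up for illustration. In a real system, the fitness step would train each candidate network and measure its accuracy, which is the expensive part that a machine like Titan spreads across thousands of GPUs.

```python
import random

# Hypothetical search space; the article does not list MENNDL's actual hyperparameters.
SEARCH_SPACE = {
    "num_layers": [1, 2, 3, 4, 6, 8],
    "kernel_size": [1, 3, 5, 7],
    "learning_rate": [0.1, 0.03, 0.01, 0.003, 0.001],
}


def random_candidate(rng):
    """Pick one value for every hyperparameter at random."""
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def mutate(candidate, rng):
    """Copy a candidate and re-draw one randomly chosen hyperparameter."""
    child = dict(candidate)
    name = rng.choice(list(SEARCH_SPACE))
    child[name] = rng.choice(SEARCH_SPACE[name])
    return child


def toy_fitness(candidate):
    """Stand-in for 'train the network and measure validation accuracy'.

    This made-up score peaks at a small network (2 layers, kernel size 3,
    learning rate 0.01); higher is better and 0 is the best possible value.
    """
    penalty = (
        abs(candidate["num_layers"] - 2)
        + abs(candidate["kernel_size"] - 3)
        + 100 * abs(candidate["learning_rate"] - 0.01)
    )
    return -penalty


def evolve(generations=30, population_size=20, keep=5, seed=0):
    """Keep the fittest candidates each generation and refill with mutated copies."""
    rng = random.Random(seed)
    population = [random_candidate(rng) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=toy_fitness, reverse=True)
        parents = population[:keep]
        children = [
            mutate(rng.choice(parents), rng)
            for _ in range(population_size - keep)
        ]
        population = parents + children
    return max(population, key=toy_fitness)


best = evolve()
print(best, toy_fitness(best))
```

Because the fittest candidates survive each generation unchanged, the best score never gets worse as the search runs. The toy score also deliberately favors a small network, echoing Young’s point that bigger is not always better.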

A Virtual Data Scientist

That’s not dissimilar to the approach of a company called H2O.ai, a startup out of Silicon Valley that uses open source machine learning platforms to “democratize” AI. It applies machine learning to create business solutions for Fortune 500 companies, including some of the world’s biggest banks and healthcare companies.

“Our software is more [about] pattern detection, let’s say anti-money laundering or fraud detection or which customer is most likely to churn,” Dr. Arno Candel, chief technology officer at H2O.ai, tells Singularity Hub. “And that kind of insight-generating software is what we call AI here.”

The company’s latest product, Driverless AI, promises to deliver the data scientist equivalent of a chessmaster to its customers (the company claims several such grandmasters in its employ and advisory board). In other words, the system can analyze a raw dataset and, like MENNDL, automatically identify what features should be included in the computer model to make the most of the data based on the best “chess moves” of its grandmasters.

“So we’re using those algorithms, but we’re giving them the human insights from those data scientists, and we automate their thinking,” he explains. “So we created a virtual data scientist that is relentless at trying these ideas.”

Inside the Black Box

As with the human brain, it’s not always possible to understand how a machine, despite being designed by humans, reaches its own solutions. The lack of transparency is often referred to as the AI “black box.” Experts like Young say we can learn something about the evolutionary process of machine learning by generating millions of neural networks and seeing what works well and what doesn’t.

“You’re never going to be able to completely explain what happened, but maybe we can better explain it than we currently can today,” Young says.

Transparency is built into the “thought process” of each particular model generated by Driverless AI, according to Candel.

The computer even explains itself to the user in plain English at each decision point. There is also real-time feedback that allows users to prioritize features, or parameters, to see how the changes improve the accuracy of the model. For example, the system may include data from people in the same zip code as it creates a model to describe customer turnover.

“That’s one of the advantages of our automatic feature engineering: it’s basically mimicking human thinking,” Candel says. “It’s not just neural nets that magically come up with some kind of number, but we’re trying to make it statistically significant.”
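The zip code example can be sketched in a few lines of Python. This is only an illustration of one kind of engineered feature, not Driverless AI’s API or method: the customer rows are made up, and `zip_churn_feature` is a hypothetical helper. For each customer, it computes the share of other customers in the same zip code who churned, leaving out the customer’s own outcome so the feature does not give away the answer.

```python
from collections import defaultdict

# Made-up toy rows for illustration only: (customer_id, zip_code, churned)
customers = [
    ("a", "37830", 1),
    ("b", "37830", 0),
    ("c", "37830", 1),
    ("d", "94043", 0),
    ("e", "94043", 0),
]


def zip_churn_feature(rows):
    """Return, per customer, the churn rate of the other customers in the same zip code."""
    totals = defaultdict(int)  # churned customers per zip code
    counts = defaultdict(int)  # all customers per zip code
    for _, zip_code, churned in rows:
        totals[zip_code] += churned
        counts[zip_code] += 1

    features = {}
    for customer_id, zip_code, churned in rows:
        others = counts[zip_code] - 1
        # Exclude the customer's own label; None when nobody else shares the zip code.
        features[customer_id] = (
            (totals[zip_code] - churned) / others if others else None
        )
    return features


features = zip_churn_feature(customers)
print(features)
```

A feature like this is easy to explain in plain English (“customers near you tend to leave”), which is the kind of readability Candel describes.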

Moving Forward

Much digital ink has been spilled over the dearth of skilled data scientists, so automating certain design aspects for developing artificial neural networks makes sense. Experts agree that automation alone won’t solve that particular problem. However, it will free computer scientists to tackle more difficult issues, such as parsing the inherent biases that exist within the data used by machine learning today.

“I think the world has an opportunity to focus more on the meaning of things and not on the laborious tasks of just fitting a model and finding the best features to make that model,” Candel notes. “By automating, we are pushing the burden back for the data scientists to actually do something more meaningful, which is think about the problem and see how you can address it differently to make an even bigger impact.”

The team at ORNL expects it can also make bigger impacts beginning next year when the lab’s next supercomputer, Summit, comes online. While Summit will boast only 4,600 nodes, it will sport the latest and greatest GPU technology from Nvidia and CPUs from IBM. That means it will deliver more than five times the computational performance of Titan, the world’s fifth-most powerful supercomputer today.

“We’ll be able to look at much larger problems on Summit than we were able to with Titan and hopefully get to a solution much faster,” Young says.

It’s all in a day’s work.

Image Credit: Gennady Danilkin / Shutterstock.com

This article was originally posted on The Verge

by James Vincent