AI research has a long history of repurposing old ideas that have gone out of style. Now researchers at OpenAI, Elon Musk’s open source AI project, have revisited “neuroevolution,” a field that has been around since the 1980s, and achieved state-of-the-art results.

The group, led by OpenAI’s research director Ilya Sutskever, has been exploring the use of a subset of algorithms from this field, called “evolution strategies,” which are aimed at solving optimization problems.

Despite the name, the approach is only loosely linked to biological evolution, the researchers say in a blog post announcing their results. On an abstract level, it relies on allowing successful individuals to pass on their characteristics to future generations. The researchers have adapted these algorithms to work better with deep neural networks and to run on large-scale distributed computing systems.

To validate their effectiveness, they then set them to work on a series of challenges seen as benchmarks for reinforcement learning, the technique behind many of Google DeepMind’s most impressive feats, including beating a champion Go player last year.

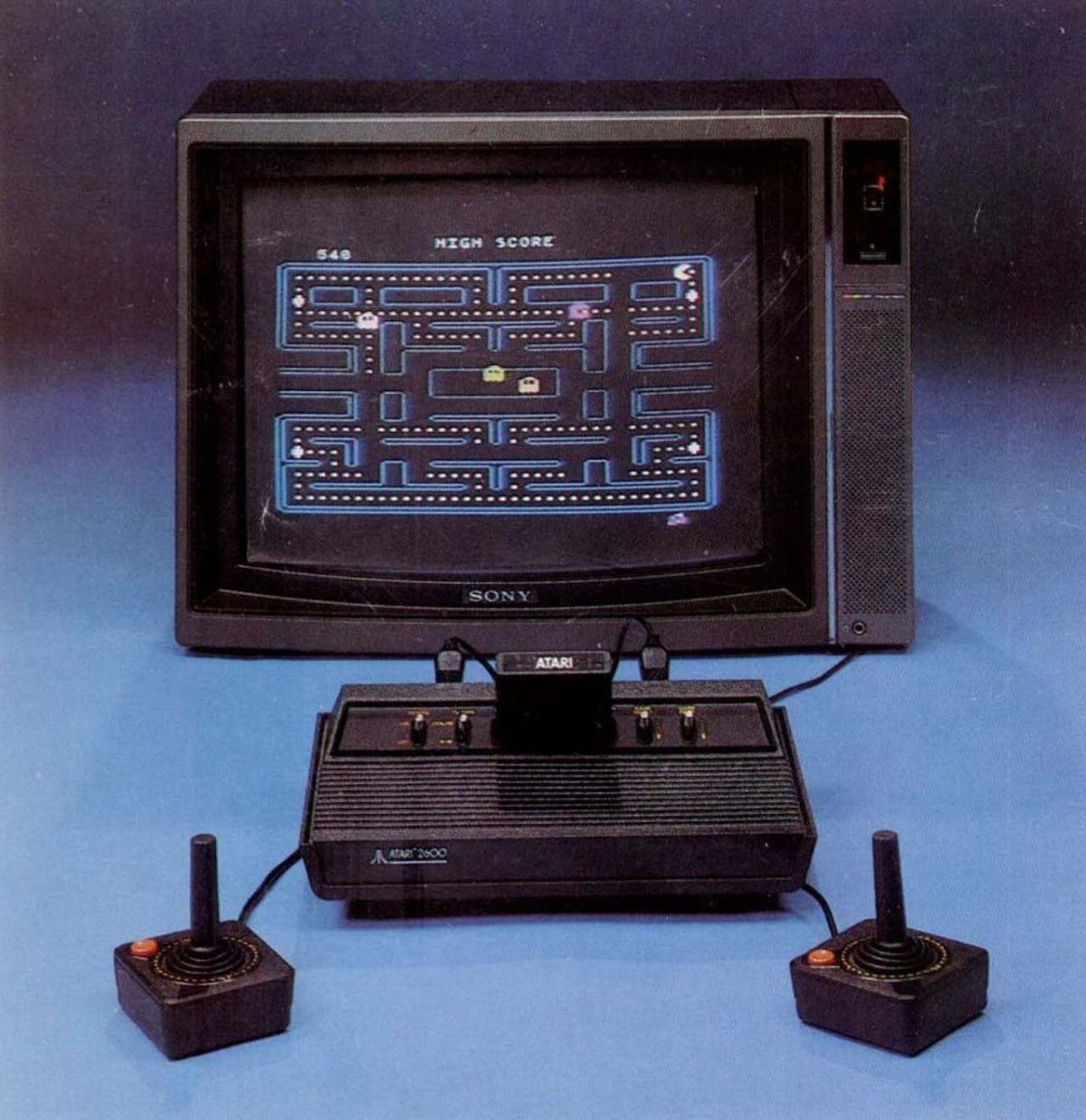

One of these challenges is to train the algorithm to play a variety of computer games developed by Atari. DeepMind made the news in 2013 when it showed it could use Deep Q-Learning—a combination of reinforcement learning and convolutional neural networks—to successfully tackle seven such games. The other is to get an algorithm to learn how to control a virtual humanoid walker in a physics engine.

To do this, the algorithm starts with a random policy—the set of rules that govern how the system should behave to get a high score in an Atari game, for example. It then creates several hundred copies of the policy—with some random variation—and these are tested on the game.

These policies are then mixed back together again, but with greater weight given to the policies that got the highest score in the game. The process repeats until the system comes up with a policy that can play the game well.
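The loop described above can be sketched in a few lines of Python. This is only an illustrative toy, not OpenAI's code: the names `evolve` and `toy_score`, the target values, and all parameter settings are hypothetical, the "policy" is just a short list of numbers, and the game score is replaced by a simple function that rewards getting close to a hidden target. Weighting copies by their standardized score is one assumed way of giving "greater weight" to the best performers.

```python
import random

# Hypothetical stand-in for a game: the score is higher the closer
# the parameters are to a hidden target.
TARGET = [0.5, -0.3, 0.8]

def toy_score(params):
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))

def evolve(score_fn, num_params, population=50, sigma=0.1,
           step_size=0.05, generations=200, seed=0):
    """Toy evolution-strategies loop: perturb, score, recombine by score."""
    rng = random.Random(seed)
    # Start from a random policy (here a flat list of parameters).
    policy = [rng.gauss(0.0, 1.0) for _ in range(num_params)]
    for _ in range(generations):
        # Create noisy copies of the current policy and test each one.
        noises = [[rng.gauss(0.0, 1.0) for _ in range(num_params)]
                  for _ in range(population)]
        scores = [score_fn([p + sigma * n for p, n in zip(policy, noise)])
                  for noise in noises]
        # Standardize scores so above-average copies get positive weight
        # and below-average copies get negative weight.
        mean = sum(scores) / population
        std = (sum((s - mean) ** 2 for s in scores) / population) ** 0.5 or 1.0
        weights = [(s - mean) / std for s in scores]
        # Mix the variations back into the policy, weighted by score.
        for i in range(num_params):
            update = sum(w * noise[i] for w, noise in zip(weights, noises))
            policy[i] += step_size * update / population
    return policy

best = evolve(toy_score, num_params=3)
print(best, toy_score(best))
```

Note that nothing in the loop needs to know how the score is computed. It only needs a number back for each candidate, which is why the same recipe can be pointed at a game or a physics simulation.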

In one hour of training on the Atari challenge, the algorithm reached a level of mastery that took a reinforcement-learning system published by DeepMind last year a whole day to learn. On the walking problem the system took 10 minutes, compared to 10 hours for Google’s approach.

One of the keys to this dramatic performance was the fact that the approach is highly “parallelizable.” To solve the walking simulation, they spread computations over 1,440 CPU cores, while in the Atari challenge they used 720.

This is possible because the approach requires limited communication between the various “worker” algorithms testing the candidate policies. Scaling reinforcement learning algorithms like the one from DeepMind in the same way is challenging because there needs to be much more communication, the researchers say.
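To give a feel for why so little communication is needed, here is a rough sketch in which each worker reports back only two numbers. It rests on an assumption the article does not spell out: every worker can rebuild its random variation from an integer seed, so the full list of parameters never has to be sent back. The names `noise_from_seed`, `worker`, `evaluate_generation` and `toy_score` are hypothetical, and Python threads stand in for the hundreds of CPU cores used in the real experiments.

```python
import random
from concurrent.futures import ThreadPoolExecutor

SIGMA = 0.1  # hypothetical noise scale for the random variations

def noise_from_seed(seed, num_params):
    """Rebuild a random variation deterministically from its integer seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(num_params)]

def worker(policy, seed, score_fn):
    """Test one varied copy of the policy and report only (seed, score)."""
    noise = noise_from_seed(seed, len(policy))
    candidate = [p + SIGMA * n for p, n in zip(policy, noise)]
    return seed, score_fn(candidate)

def evaluate_generation(policy, seeds, score_fn, max_workers=4):
    """Fan the candidates out to workers and collect their small replies."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda s: worker(policy, s, score_fn), seeds))

# Hypothetical stand-in for a game score: best when all parameters are zero.
def toy_score(params):
    return -sum(p * p for p in params)

results = evaluate_generation([0.0, 0.0, 0.0], seeds=range(8), score_fn=toy_score)
for seed, score in results:
    print(seed, score)
```

Because the coordinator can call `noise_from_seed` itself, a seed and a score are enough for it to reconstruct what each worker tried and how well it did.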

The approach also doesn’t require backpropagation, a common technique in neural network-based approaches, including deep reinforcement learning. Backpropagation effectively compares the network’s output with the desired output and then feeds the resulting information back into the network to help optimize it.

The researchers say this makes the code shorter and the algorithm between two and three times faster in practice. They also suggest it will be particularly suited to longer challenges and situations where actions have long-lasting effects that may not become apparent until many steps down the line.

The approach does have its limitations, though. These kinds of algorithms are usually compared based on their data efficiency—the number of iterations required to achieve a specific score in a game, for example. On this metric, the OpenAI approach does worse than reinforcement learning approaches, although this is offset by the fact that it is highly parallelizable and so can carry out iterations more quickly.

For supervised learning problems like image classification and speech recognition, which currently have the most real-world applications, the approach can also be as much as 1,000 times slower than other approaches that use backpropagation.

Nevertheless, the work demonstrates promising new applications for out-of-style evolutionary approaches, and OpenAI is not the only group investigating them. Google has been experimenting with similar strategies to devise better image recognition algorithms. Whether this represents the next evolution in AI we will have to wait and see.

Image Credit: Shutterstock

This article and its images were originally posted on Singularity Hub.
